Is The UK's AI Safety Institute Dominated by X-Risk Advocates?

There needs to be full disclosure to avoid regulatory capture by the AI-as-Existential-Risk lobby

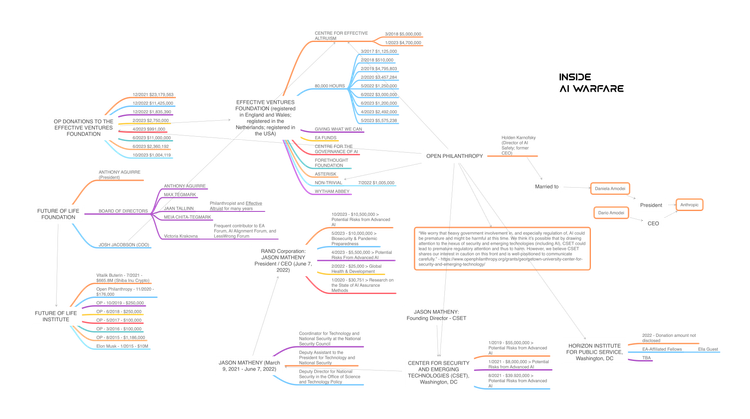

The UK and the U.S. are simultaneously standing up AI Safety Institutes, with the UK having taken the lead. However, there is reason to be concerned about the quality of the guidance that comes from both institutes due to the number of individuals and organizations associated with the Effective Altruism movement and its highly speculative "AI poses an existential risk to humanity" (x-risk) platform.

A diversity of informed opinions is critical to providing sound guidance to regulatory bodies and policymakers, and both institutes have acknowledged their intention to achieve that benchmark. Unfortunately, how these institutes will go about ensuring diversity hasn't been discussed publicly, at least not anywhere that I've been able to find. The early appointments from last November are worrisome to me for reasons that should be apparent in the map at the top of this page.

The following is a breakdown of EA and x-risk affiliations of those named in past UK government announcements.

IAN HOGARTH

Ian Hogarth is the Chair of the UK's AI Safety Institute. In his April 2023 op-ed for the Financial Times, "We must slow down the race for God-like AI," he endorsed many of the arguments put forth by Effective Altruists (EA) who focus on existential risk (x-risk). Hogarth was appointed Chair of the UK government's Foundation Model Taskforce in June. By September, "Foundation Model" had been dropped from the taskforce's name and replaced with "Frontier AI," a term invented by the EA/x-risk community to encapsulate a hypothetical future iteration of AI that may see humans as nothing more than raw materials to utilize on its path towards goal fulfillment.

Hogarth has invested in Conjecture, an AI Safety firm founded by Connor Leahy, an x-risk believer and Effective Altruist, as well as Anthropic, whose founders are all EA / x-risk believers.

PAUL CHRISTIANO

Paul Christiano was appointed to the Institute's Advisory Board fairly early, and his organization is listed as a partnering company. Christiano's ties with Effective Altruism and x-risk run deep. This table of his affiliations is courtesy of AI Watch.

Redwood Research, where Christiano was a board member and director, is one of the corporate partnerships listed with the UK's AI Safety Institute. Not listed above is Christiano's current work at NIST where he leads the AI Safety team, to the distress of a number of NIST employees.

Christiano founded the Alignment Research Center, and METR (formerly ARC Evals), another corporate partner of the UK Institute, was spun out of it.

REDWOOD RESEARCH

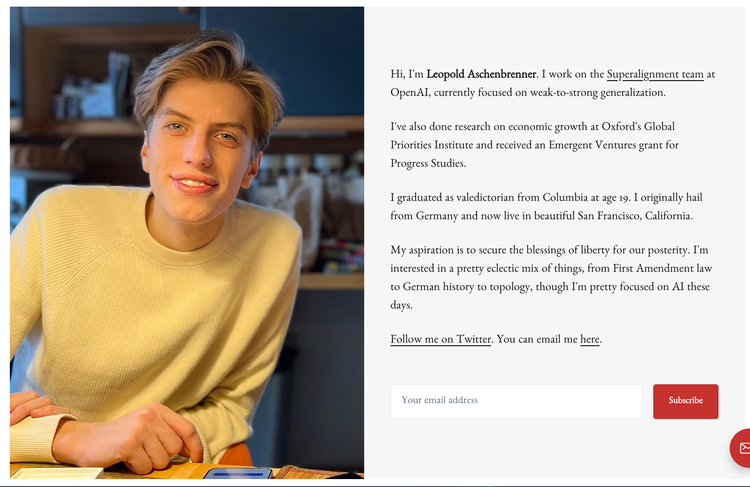

Redwood Research includes both Paul Christiano and Holden Karnofsky, the CEO of Open Philanthropy, who is married to Daniela Amodei, a co-founder of Anthropic.

The organization has reportedly had some serious problems, including a lack of senior ML researchers, a lack of communication with the broader community, and work culture issues.

Considering that almost $30M has gone to Redwood Research between 2021 and 2023, I found the section of the report regarding personal relationships between Open Philanthropy executives and Redwood Research executives surprising. It makes me wonder if any EA funding organization has a conflict-of-interest policy, or if this is just peculiar to Open Philanthropy.

Two of Redwood's leadership team have or have had relationships to an OP grant maker. A Redwood board member is married to a different OP grantmaker. A co-CEO of OP is one of the other three board members of Redwood. Additionally, many OP staff work out of Constellation, the office that Redwood runs. OP pays Redwood for use of the space.

CENTER FOR AI SAFETY (CAIS)

CAIS was founded by Effective Altruist Dan Hendrycks and has received over $10M in combined funding from Open Philanthropy and FTX in the past two years. FTX has asked for its $6.5M donation back, but CAIS has been non-responsive.

COLLECTIVE INTELLIGENCE PROJECT

The project is led by Divya Siddarth and Saffron Huang, who are tangentially aligned with EA. Siddarth has contributed to papers on Frontier AI and Existential Risk topics.

RAND

The RAND Corporation has a diverse group of people working on AI Safety, some of whom are firm believers in the existential risk mythos, including RAND's President and CEO Jason Matheny and scientist Jeff Alstott, one of the RAND scientists on the advisory board.

GEOFFREY IRVING

Geoffrey Irving was named as an expert AI researcher to the Institute. He's with the Cambridge Centre for the Study of Existential Risk, funded by well-known x-risk figures Jaan Tallinn, Huw Price, and Martin Rees.

DAVID KRUEGER

David Krueger was also named as an expert AI researcher to the Institute. He's from UC Berkeley's Center for Human-Compatible AI (CHAI), an organization founded by ardent x-risk evangelist, Slaughterbots creator, and computer scientist Stuart J. Russell. CHAI has received millions in funding from Open Philanthropy and its affiliate Good Ventures.

SUMMARY

Effective Altruism is leveraging a massive budget to evangelize its message about the dangers of a Superhuman Artificial Intelligence in order to get a seat at the government table. Since the danger is fictional, not factual, the EA / x-risk lobby acts as a confusing distraction that can only hurt the AI industry's overall effort to inform legislators about authentic problems and how to address them.

RECOMMENDED READING

Helfrich, G. The harms of terminology: why we should reject so-called “frontier AI”. AI Ethics (2024). https://doi.org/10.1007/s43681-024-00438-1

Westerstrand, S., Westerstrand, R. & Koskinen, J. Talking existential risk into being: a Habermasian critical discourse perspective to AI hype. AI Ethics (2024). https://doi.org/10.1007/s43681-024-00464-z
